e-Rater: An unjust grading system

August 25, 2017

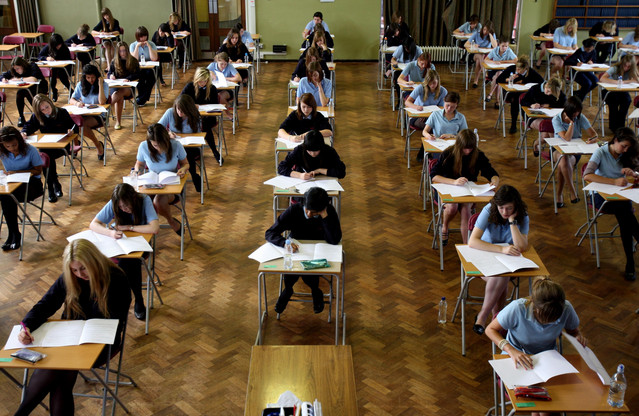

Every year, millions of students take standardized tests in hopes of reaching the target scores that will earn them admission to their dream colleges.

Recently, nine companies developed automated essay graders, all capable of grading thousands of essays in just seconds. However, only one allowed researchers to test its product. Educational Testing Service, a company that administers millions of tests every year, has developed e-Rater, a robot it hopes will be used for the SAT, AP tests and many other high-pressure tests.

Initially, the e-Rater was seen as a groundbreaking, more efficient and more accurate way to grade the millions of essays that students write for standardized tests. However, those advantages are minor compared to the long list of flaws that come with having a lifeless machine do the grading.

Aside from grammar and spelling, essay grading is a subjective process. However, robo-readers such as the e-Rater can only grade objectively; they are not capable of judging more subjective aspects of the essay, such as fluidity, style and structure.

Because the criteria for what makes an essay good or bad are so rigid, a formula for beating these machines has emerged: long sentences, correct grammar, long paragraphs and sophisticated word choice. If these four criteria are met, the essay is given a top score, regardless of whether its content is accurate.

Les Perelman, director of writing at MIT, studied the e-Rater's algorithm to find out exactly what the robot considers a high-scoring essay. Perelman found that the e-Rater takes every statement to be factually correct. Students can essentially throw in any fact, fabricated or not, and the machine will accept it.

Perelman also found that quantity trumps quality. An essay of more than 700 words filled with senseless sentences was given a higher score than a stronger, better-developed essay of just over 500 words.

This is unfair to students who have put time and effort into their essays and into developing their writing skills. One student’s essay can be well developed, strongly supported and filled with substance, yet another essay full of mindless filler and marked by poor organization and development can score higher purely because the robo-reader is biased toward length.

If the entire purpose of standardized tests is to determine students’ eligibility for college admission, it seems counterproductive to use a machine that rewards length and word choice over substance and writing ability.

As of now, only a human grader is capable of judging a student’s writing ability as a whole, and teaching students how to beat a machine should not replace the development of quality writing skills.